We’re back again for 2025! Here’s the familiar prompt supplied to ChatGPT now running the 5.1 model:

> Draw a Christmas tree using SwiftUI


```swift
import SwiftUI

struct ChristmasTreeView: View {
    var body: some View {
        ZStack {
            Color.black.opacity(0.9)
                .ignoresSafeArea()

            VStack(spacing: 0) {
                // Star
                StarShape(points: 5)
                    .fill(Color.yellow)
                    .frame(width: 40, height: 40)
                    .shadow(radius: 5)
                    .padding(.bottom, 8)

                // Tree (3 triangles)
                TreeLayer(color: .green, scale: 1.0)
                TreeLayer(color: .green, scale: 1.2)
                TreeLayer(color: .green, scale: 1.4)

                // Trunk
                Rectangle()
                    .fill(Color.brown)
                    .frame(width: 30, height: 50)
                    .padding(.top, -10)
            }
            .overlay(OrnamentsView())
        }
    }
}

// MARK: - Tree layer (triangle)

struct TreeLayer: View {
    let color: Color
    let scale: CGFloat

    var body: some View {
        Triangle()
            .fill(
                LinearGradient(
                    gradient: Gradient(colors: [color.opacity(0.8), color]),
                    startPoint: .top,
                    endPoint: .bottom
                )
            )
            .frame(width: 160 * scale, height: 100 * scale)
            .shadow(radius: 5)
            .offset(y: -20 * (scale - 1)) // stack layers nicely
    }
}

struct Triangle: Shape {
    func path(in rect: CGRect) -> Path {
        var path = Path()
        path.move(to: CGPoint(x: rect.midX, y: rect.minY)) // top
        path.addLine(to: CGPoint(x: rect.minX, y: rect.maxY)) // bottom left
        path.addLine(to: CGPoint(x: rect.maxX, y: rect.maxY)) // bottom right
        path.closeSubpath()
        return path
    }
}

// MARK: - Star shape

struct StarShape: Shape {
    var points: Int

    func path(in rect: CGRect) -> Path {
        guard points >= 2 else { return Path() }

        let center = CGPoint(x: rect.midX, y: rect.midY)
        let radius = min(rect.width, rect.height) / 2
        let innerRadius = radius * 0.4

        var path = Path()
        let angleStep = .pi * 2 / Double(points * 2)
        var angle = -Double.pi / 2 // start pointing up
        var firstPoint = true

        for i in 0 ..< points * 2 {
            let r = (i % 2 == 0) ? radius : innerRadius
            let x = center.x + CGFloat(cos(angle)) * r
            let y = center.y + CGFloat(sin(angle)) * r

            if firstPoint {
                path.move(to: CGPoint(x: x, y: y))
                firstPoint = false
            } else {
                path.addLine(to: CGPoint(x: x, y: y))
            }

            angle += angleStep
        }

        path.closeSubpath()
        return path
    }
}

// MARK: - Ornaments overlay

struct OrnamentsView: View {
    var body: some View {
        ZStack {
            ornament(x: -50, y: -40, color: .red)
            ornament(x: 40, y: -20, color: .yellow)
            ornament(x: -30, y: 10, color: .blue)
            ornament(x: 50, y: 30, color: .pink)
            ornament(x: 0, y: -5, color: .orange)
        }
    }

    private func ornament(x: CGFloat, y: CGFloat, color: Color) -> some View {
        Circle()
            .fill(color)
            .frame(width: 14, height: 14)
            .shadow(radius: 3)
            .offset(x: x, y: y)
    }
}

// MARK: - Preview

struct ChristmasTreeView_Previews: PreviewProvider {
    static var previews: some View {
        ChristmasTreeView()
    }
}
```

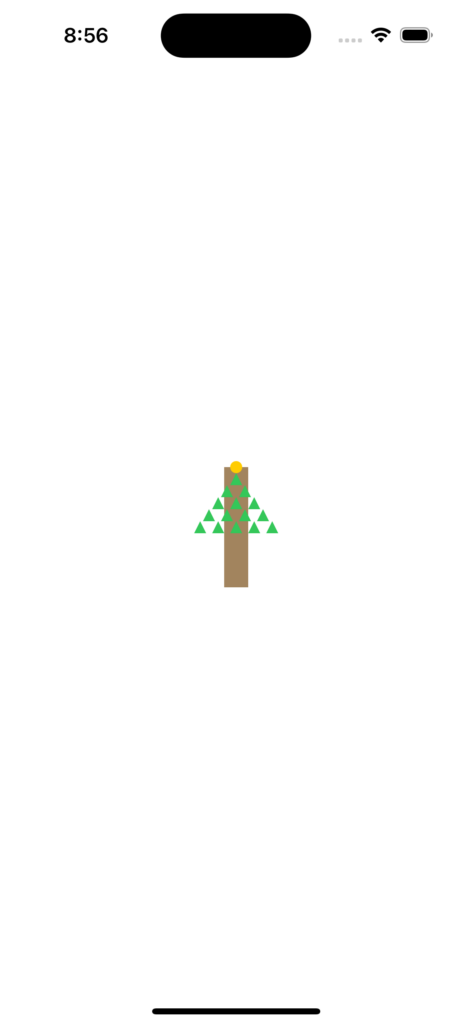

Have we already hit peak tree!? Was it decorated as lazily as possible because the model knows how I’d do it?

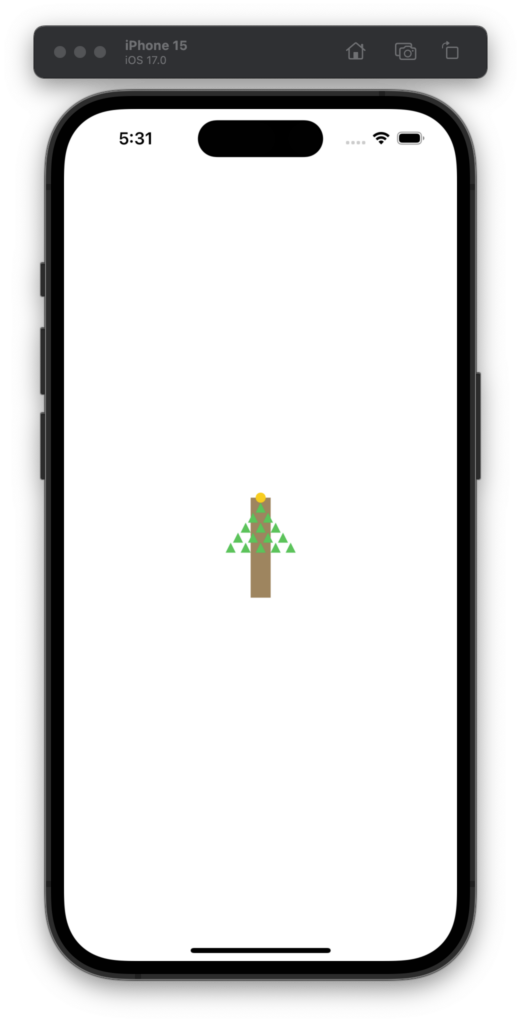

Visually things are similar, but maybe a little worse:

- The tree’s body looks more like three stacked triangles than one contiguous shape.

- Ornaments cluster toward the middle, with one ornament attached to thin air.

- We still have a floating star.

- It tried to get fancy with some opacity and shadow tricks, but they fall a bit flat.

- Once again we have a new background color.
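The "three stacked triangles" look falls out of the numbers in `TreeLayer`. Here is a rough sketch of the arithmetic in plain Swift, relying on the fact that `.offset` only moves where a view is drawn and does not change the space it takes up in the `VStack`. The `LayerGeometry` type and `layerGeometries` function are hypothetical names I made up for this illustration; they are not part of the generated code.

```swift
import Foundation

// Hypothetical helper type for illustration only.
struct LayerGeometry {
    let height: Double     // frame height: 100 * scale
    let layoutTop: Double  // where the VStack (spacing: 0) places the layer
    let drawnTop: Double   // layoutTop plus the visual-only offset
}

/// Mirrors the numbers in TreeLayer:
/// height = 100 * scale, offset = -20 * (scale - 1).
func layerGeometries(scales: [Double]) -> [LayerGeometry] {
    var result: [LayerGeometry] = []
    var layoutTop = 0.0 // measured from the top of the first layer
    for scale in scales {
        let height = 100 * scale
        let offset = -20 * (scale - 1)
        result.append(
            LayerGeometry(height: height,
                          layoutTop: layoutTop,
                          drawnTop: layoutTop + offset)
        )
        layoutTop += height // offset does not affect layout
    }
    return result
}

let layers = layerGeometries(scales: [1.0, 1.2, 1.4])

// How far each layer's apex reaches up into the layer above it.
for (upper, lower) in zip(layers, layers.dropFirst()) {
    let overlap = (upper.drawnTop + upper.height) - lower.drawnTop
    print("overlap:", overlap)
}
```

Under those assumptions each layer overlaps the one above by only about 4 points, against layer heights of 100 to 140 points, which is why the tree reads as three separate shapes.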

That said, this isn’t too terrible considering that I didn’t give the model the opportunity to analyze its own visual output and retry.

Code-wise:

- ✅ It extracted more reusable views so the main body view is easier to understand.

- ✅ Made good use of `MARK` annotations to increase readability.

- ✅ Removed some global “magic numbers” (e.g. offsets) to enable adaptability to other canvas sizes.

- 🤔 Made the generation of the `StarShape` path a lot more complicated. It traded simplicity for “flexibility” with ultimately the same output.
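To make the `StarShape` point concrete, here is a minimal sketch of what a less flexible version of the geometry might look like, assuming a five-point star is all we ever need. It is plain Swift so it runs outside SwiftUI; `fivePointStarVertices` is a hypothetical name, and the 0.4 inner-radius ratio is copied from the generated code.

```swift
import Foundation

/// Vertices of a five-point star inscribed in a circle of `radius`
/// centered at (cx, cy). Starts at the top point and alternates
/// outer and inner vertices, using y-down screen coordinates.
/// Hypothetical helper for illustration only.
func fivePointStarVertices(cx: Double, cy: Double, radius: Double) -> [(x: Double, y: Double)] {
    let innerRadius = radius * 0.4 // same ratio as the generated StarShape
    return (0..<10).map { i -> (x: Double, y: Double) in
        let r = i % 2 == 0 ? radius : innerRadius
        // Start pointing up (-90 degrees), then step 36 degrees per vertex.
        let angle = -Double.pi / 2 + Double(i) * Double.pi / 5
        return (x: cx + cos(angle) * r, y: cy + sin(angle) * r)
    }
}

// Example: a star filling a 40 x 40 frame.
let vertices = fivePointStarVertices(cx: 20, cy: 20, radius: 20)
print(vertices.count)
```

Inside a `Shape`, these vertices could be converted to `CGPoint` values and connected into a closed path. Hardcoding five points drops the `guard` and the `firstPoint` flag, at the cost of the `points` parameter the tree never varies anyway.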

Happy Holidays! 🎄🤖