“Because my garage door doesn’t need an operating system”

I'll admit it: I went a little overboard with the Raspberry Pi garage door opener. A machine that complex was being used to do something as simple as pressing a button. Why did it need graphics capabilities? A multicore processor? Cron jobs? It didn't.

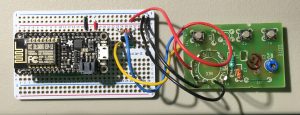

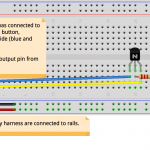

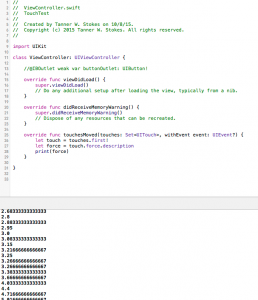

Enter the Adafruit Feather HUZZAH ESP8266. All the right junk in all the right places. A simple HTTP request to the Feather, and we’re good to go.
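The post doesn't show the Feather's firmware, so here is a rough desktop simulation of the idea in Python. It is a sketch, not the actual code that runs on the board. Several details are hypothetical and not from the original: the `/press` endpoint, the 0.5 s pulse length, and the `Relay` class, which stands in for a GPIO pin driving a relay wired across the opener's button contacts. On the real board the same logic would live in the Feather's own firmware (for example an Arduino sketch).

```python
import threading
import time
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical values: the post doesn't specify the endpoint or pulse length.
PRESS_PATH = "/press"
PULSE_SECONDS = 0.5


class Relay:
    """Hypothetical stand-in for a GPIO pin driving a relay across the button."""

    def __init__(self):
        self.events = []  # records each on/off transition for inspection

    def set(self, on):
        self.events.append(on)


def press_button(relay, pulse_seconds=PULSE_SECONDS):
    # A "button press" is just closing the contacts briefly, then releasing.
    relay.set(True)
    time.sleep(pulse_seconds)
    relay.set(False)


def make_handler(relay, pulse_seconds):
    class Handler(BaseHTTPRequestHandler):
        def do_POST(self):
            if self.path != PRESS_PATH:
                self.send_error(404)
                return
            press_button(relay, pulse_seconds)
            body = b"pressed\n"
            self.send_response(200)
            self.send_header("Content-Type", "text/plain")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format, *args):
            pass  # keep the console quiet

    return Handler


def build_server(relay, host="0.0.0.0", port=80, pulse_seconds=PULSE_SECONDS):
    return HTTPServer((host, port), make_handler(relay, pulse_seconds))


if __name__ == "__main__":
    relay = Relay()
    server = build_server(relay, "127.0.0.1", 0)  # port 0: the OS picks a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()

    # Bypass any environment proxy so the request goes straight to localhost.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    url = f"http://127.0.0.1:{server.server_port}{PRESS_PATH}"
    with opener.open(url, data=b"") as resp:  # data=b"" makes this a POST
        print(resp.status, resp.read().decode().strip())
    print(relay.events)

    server.shutdown()
    server.server_close()
```

The press is exposed as a POST rather than a GET so that link previews or prefetching can't open the door by accident. That is a design choice in this sketch, not something the post specifies.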

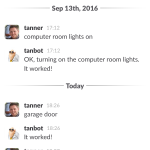

With the recent release of iOS 10, I took it a step further. Could I get this thing to work with Siri? As it turns out, it’s really not that hard: